In a previous post, I pointed out that the move to “application level” transports enables transport innovation and bypasses the traditional gatekeepers, the OS developers. The one big fear is that applications would seek greater performance through selfish innovation, and that the combined effect of many large applications doing that could destabilize the Internet. The proposed solution is to have networks manage traffic, so each user gets a fair share. But the big question is whether this kind of traffic management can in fact be deployed on the current Internet.

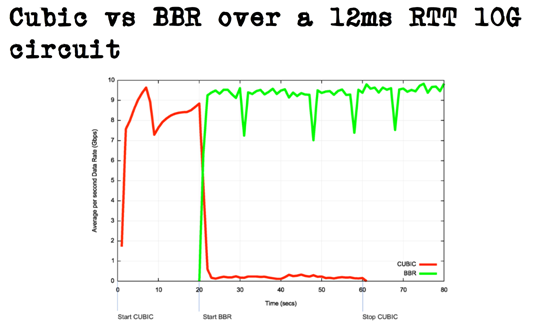

Let’s not forget that innovation is generally a good thing. The congestion control algorithms currently in use are certainly not the best that we can think of, and in fact many experimental designs have demonstrated better latency, or better throughput, or both; this paper on a “protocol design contest” provides many examples. But innovation is not fair. For example, a protocol that tracks transmission delays will not behave the same way as a protocol that tracks packet losses, and it is very hard to tune them so that they compete fairly. This will be a problem if some applications feel penalized, and the competition could degenerate into a catastrophic race to the bottom. But if the network is designed to enforce fairness, then it can enable innovation without this major inconvenience. That’s why I personally care about traffic management, or active queue management (AQM).

Before answering whether the AQM algorithms can be deployed, let’s look at where we need them most. Congestion appears first at bottlenecks, where the incoming traffic exceeds the available capacity. Bottlenecks happen on the customers’ downlinks, when too many devices try to download too much data simultaneously, or on customers’ uplinks, when applications like games, VPN and video chat compete. They happen on wireless links when the radio fades, or when too many users are using the same cell. They may happen on the backhaul networks that connect cell towers to the Internet. Thanks to large capacity optical fibers, they do not happen so often anymore on transoceanic links, but they still happen in some countries when the international connections are underprovisioned. Is it possible to shape traffic at all these places?

In fact, these traffic management techniques are already used in many of these places. Take wireless networks. They already manage bandwidth allocation, so that this scarce resource can be fairly shared between competing users. If an application tries to send too much data, it will only manage to create queues and increase latency for that application’s user, without impacting other users too much. In that case, applications have a vested interest in using efficient mechanisms for congestion control. An application that does will appear much more responsive than one that does not.
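To make the isolation argument concrete, here is a minimal sketch of a bottleneck that keeps one queue per user and serves the queues in round-robin order. It is a toy model under stated assumptions, not a description of how any real wireless scheduler works: the function name, the tick-based timing and the load figures are all hypothetical.

```python
from collections import deque


def simulate_per_user_queues(arrivals_per_tick, link_capacity, ticks):
    """Toy round-robin scheduler over per-user queues (hypothetical model).

    arrivals_per_tick: dict mapping user -> packets offered at every tick
    link_capacity: packets the shared link can forward per tick
    Returns a dict mapping user -> (final backlog, packets delivered).
    """
    queues = {user: deque() for user in arrivals_per_tick}
    delivered = {user: 0 for user in arrivals_per_tick}
    users = list(arrivals_per_tick)
    next_user = 0
    for tick in range(ticks):
        # Each user enqueues its offered load in its own queue.
        for user, count in arrivals_per_tick.items():
            queues[user].extend([tick] * count)
        # Serve one packet per user in turn until the capacity is used up
        # or every queue has been found empty.
        budget = link_capacity
        idle_visits = 0
        while budget > 0 and idle_visits < len(users):
            user = users[next_user]
            next_user = (next_user + 1) % len(users)
            if queues[user]:
                queues[user].popleft()
                delivered[user] += 1
                budget -= 1
                idle_visits = 0
            else:
                idle_visits += 1
    return {user: (len(queues[user]), delivered[user]) for user in users}


result = simulate_per_user_queues({"greedy": 3, "modest": 1},
                                  link_capacity=2, ticks=100)
print(result)
```

In this toy run both users get the same share of the link, and only the greedy sender accumulates a backlog, which is the behavior described above: sending too fast mostly hurts the sender.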

Traffic management is also used in cable networks, at least in those networks that abide by the DOCSIS specifications of CableLabs. Active queue management was added to the cable modem specifications with DOCSIS 3.1, published in 2014. There are also implementations of active queue management such as FQ-Codel for OpenWRT, the open source software for home routers. Clearly, deployment of AQM on simple devices is possible.

The edge of the network appears covered. But congestion might also happen in the middle of the network, for example in constrained backhaul networks, at interconnection points between networks, or on some international connections. The traffic at those places is handled by high performance routers, in which the forwarding of packets is handled by “data plane” modules optimized for speed. Programming AQM algorithms there is obviously harder than on general purpose processors, but some forms of control are plausible. Many of these routers support “traffic shaping” algorithms that resemble AQM algorithms, so there is certainly some hope.
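The post does not say which shaping algorithms these routers implement. As an illustration only, the token bucket is one classic building block for rate limiting, and it is simple enough to suggest why this kind of control fits in a fast forwarding path. The sketch below assumes that model; the class name and parameters are hypothetical.

```python
class TokenBucket:
    """Minimal token bucket rate limiter (illustrative, not a router API)."""

    def __init__(self, rate_bytes_per_s, burst_bytes):
        self.rate = rate_bytes_per_s   # long-term rate allowed
        self.burst = burst_bytes       # maximum burst size
        self.tokens = burst_bytes      # the bucket starts full
        self.last = 0.0                # time of the previous update, seconds

    def allow(self, now, size):
        """Return True if a packet of `size` bytes conforms at time `now`."""
        # Refill according to elapsed time, capped at the burst size.
        elapsed = now - self.last
        self.last = now
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        if size <= self.tokens:
            self.tokens -= size
            return True
        # Non-conforming packet: a shaper would delay it, a policer drop it.
        return False


bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
first = bucket.allow(0.0, 1500)    # the full bucket covers the first packet
second = bucket.allow(1.0, 1500)   # only 1000 tokens have been refilled
third = bucket.allow(1.5, 1500)    # 500 more tokens bring it back to 1500
print(first, second, third)
```

The per-packet work is a handful of arithmetic operations and one comparison. A real AQM needs more state than this, such as per-flow queues or queueing delay measurements, which is part of why it is harder to fit in a data plane.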

But rather than speculating, it might be more useful to have a discussion between the developers of routers and those of end to end transport algorithms. How do we best unleash innovation while keeping the network stable? Hopefully, that discussion can happen at the IETF meeting in Prague, next March.